Wed 07 June 2023:

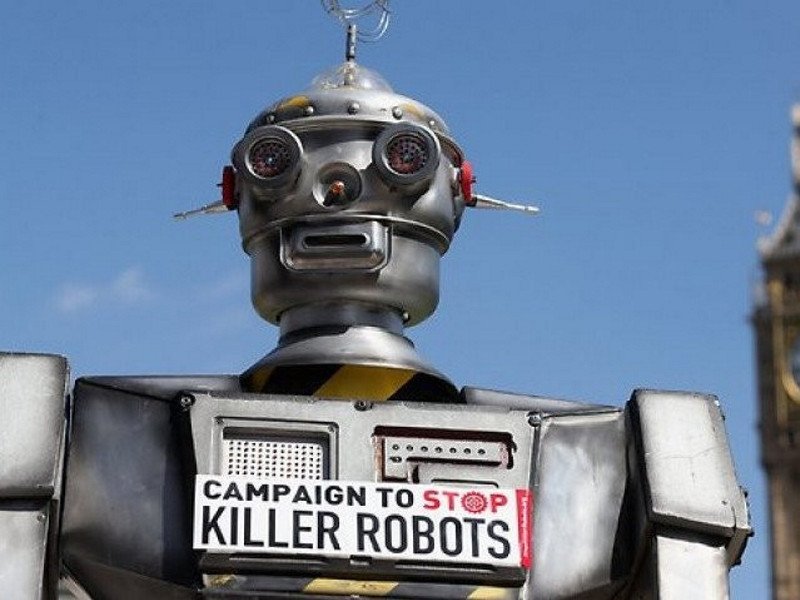

Prominent figures from companies such as Google, DeepMind, and OpenAI have joined Clifford in calling for the risks associated with AI to be treated as a global priority due to the potential threat they pose to humanity. Elon Musk, CEO of Tesla and SpaceX, has also voiced concerns about the potential harm caused by “Terminator”-style robots.

Clifford, who chairs the government’s Advanced Research and Invention Agency, described the world as being at a tipping point, with even short-term risks being deeply concerning.

“The truth is, no one knows. There are a very broad range of predictions among AI experts. I think two years will be at the very most sort of bullish end of the spectrum,” Clifford said.

Clifford later clarified his comments in a thread on Twitter, saying: “Short and long-term risks of AI are real and it’s right to think hard and urgently about mitigating them, but there’s a wide range of views and a lot of nuance here, which it’s important to be able to communicate.”

He said that he had told TalkTV that there are “very broad ranges of predictions among AI experts” on how long it would be before machines could become too powerful for humans to control.

Speaking on the First Edition show, Clifford stated that the creation of AI that surpasses human intelligence without adequate control mechanisms presents various risks, both presently and in the future. He believes that policymakers should prioritize addressing these risks.

While acknowledging that the risk of AI turning against humans may sound like the plot of a movie, Clifford emphasized that it is not zero.

He highlighted the striking rise in AI capabilities and the potential dangers posed by bioweapons and cyber threats, which could result in the loss of many lives as AI advances over the next two years.

Furthermore, Clifford mentioned that the signatories of the letter openly admit their lack of understanding regarding the behaviors exhibited by machines, which he finds quite terrifying.

Clifford said he agreed that a new global regulator needs to be put in place.

He added: “It’s certainly true that if we try and create artificial intelligence that is more intelligent than humans and we don’t know how to control it, then that’s going to create a potential for all sorts of risks now and in the future. So I think there’s lots of different scenarios to worry about but I certainly think it’s right that it should be very high on the policy makers’ agendas”.

Asked what the “tipping point” might be, Clifford said: “I think there’s lots of different types of risks with AI and often in the industry we talk about near-term and long-term risks and the near-term risks are actually pretty scary.

“You can use AI today to create new recipes for bioweapons or to launch large-scale cyber attacks, you know, these are bad things. The kind of existential risk that I think the letter writers were talking about is exactly as you said, they’re talking about what happens once we effectively create a new species, you know an intelligence that is greater than humans.”

Sam Altman, the chief executive of OpenAI, which operates ChatGPT, has also called for tougher regulation of the sector and has said the technology should be handled in the same way as nuclear material.

SOURCE: INDEPENDENT PRESS AND NEWS AGENCIES
