Thu 01 February 2024:

Social media platform X has blocked searches for one of the world’s most popular personalities, Taylor Swift, after sexually explicit AI-generated images of the singer-songwriter went viral.

The deepfakes flooded several social media sites, from Reddit to Facebook, renewing calls to strengthen legislation around AI, particularly where it is misused for sexual harassment.

What happened to Taylor Swift?

On Wednesday, AI-generated, sexually explicit images began circulating on social media sites, particularly gaining traction on X. One image of the megastar was seen 47 million times during the approximately 17 hours it was live on X before it was removed on Thursday.

The deepfake-detecting group Reality Defender told The Associated Press news agency that it tracked down dozens of unique images that spread to millions of people across the internet before being removed.

X has banned searches for Swift and queries relating to the photos, instead displaying an error message.

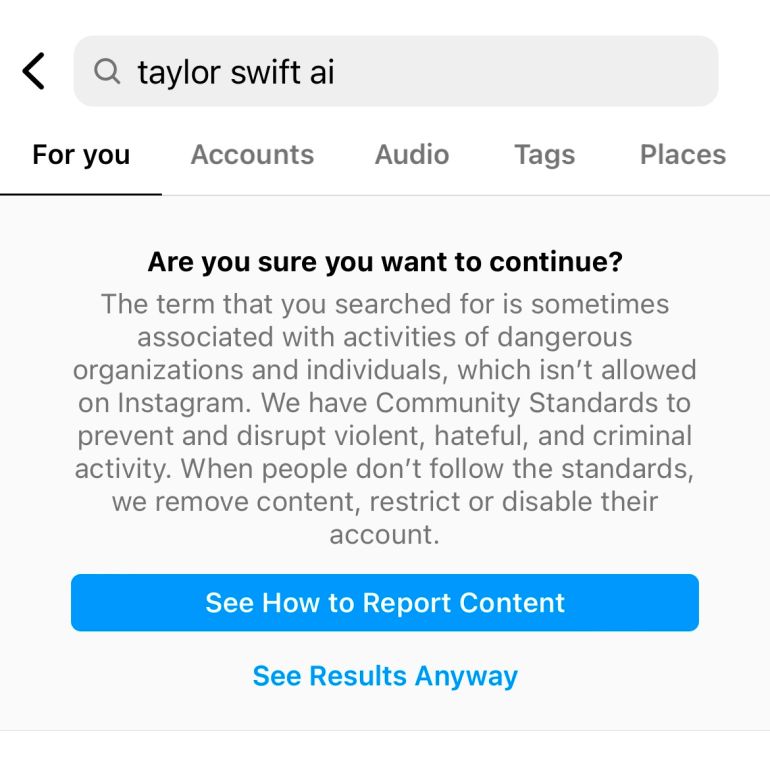

Instagram and Threads, while allowing searches for Swift, display a warning message when specifically searching for the images.

What are platforms like X and AI sites saying?

On Friday, X’s safety account released a statement that reiterated the platform’s “zero-tolerance policy” on posting nonconsensual nude images. It said the platform was removing images and taking action against accounts that violate the policy.

Meta also released a statement condemning the content, adding that it will “take appropriate action as needed”.

“We’re closely monitoring the situation to ensure that any further violations are immediately addressed, and the content is removed,” the company said.

Microsoft, which offers an image generator based partly on OpenAI’s DALL-E, said on Friday that it was investigating whether its tool had been misused.

Kate Vredenburgh, assistant professor in the Department of Philosophy, Logic and Scientific Method at the London School of Economics, pointed out that social media business models are often built on the sharing of content, and that platforms usually approach such events by cleaning up afterwards.

Swift has not issued a statement about the images. The pop star was seen on Sunday at an NFL game in the United States, cheering on her boyfriend Travis Kelce as his Kansas City Chiefs advanced to the Super Bowl.

Posting Non-Consensual Nudity (NCN) images is strictly prohibited on X and we have a zero-tolerance policy towards such content. Our teams are actively removing all identified images and taking appropriate actions against the accounts responsible for posting them. We're closely…

— Safety (@Safety) January 26, 2024

What is a deepfake and how can it be misused?

Swift is not the first public figure to be targeted with deepfakes, a form of “synthetic media”: media generated or manipulated using artificial intelligence.

Images and videos can be generated from scratch by giving AI tools prompts that describe what to display. Alternatively, the face of a person in an existing video or image can be swapped for someone else’s, such as a public figure’s.

Fake videos are commonly made in this way to show politicians appearing to endorse statements they never made, or people engaging in sexual activities they never took part in. Recently, in India, sexually explicit deepfakes of actress Rashmika Mandanna went viral on social media, sparking an uproar. A gaming app used a deepfake of cricketing icon Sachin Tendulkar to promote its product. And in the US, former Republican presidential contender Ron DeSantis’s campaign produced deepfakes showing ex-President Donald Trump, who is leading the party’s presidential nomination race, kissing public health specialist Anthony Fauci, a hated figure for many conservatives because of his advocacy for masks and vaccines during the COVID-19 pandemic.

While some deepfakes are easy to identify because of their poor quality, others can be much harder to distinguish from real footage. Several generative AI tools, such as Midjourney, Deepfakes Web and DALL-E, are available to users for free or for a small fee.

More than 96 percent of deepfake images currently online are of a pornographic nature, and nearly all of them target women, according to a report by Sensity AI, an intelligence company focused on detecting deepfakes.

Is there any legislation that could protect online users?

Legislation specifically addressing deepfakes varies by country and typically ranges from requiring disclosure of deepfakes to prohibiting content that is harmful or malicious.

Ten states in the US, including Texas, California and Illinois, have criminal laws against deepfakes. Lawmakers are pushing for a similar federal law or for more restrictions on the technology. US Representative Yvette Clarke, a Democrat from New York, has introduced legislation that would require creators to digitally watermark deepfake content.

Nonconsensual sexually explicit deepfakes could also violate broader US laws. Federal law does not specifically criminalise such deepfakes, but there are state and federal laws targeting privacy, fraud and harassment.

In 2019, China implemented laws mandating disclosure of deepfake usage in videos and media. In 2023, the United Kingdom made sharing deepfake pornography illegal as part of its Online Safety Act.

South Korea enacted a law in 2020 criminalising the distribution of deepfakes causing harm to the public interest, imposing penalties of up to five years in prison or fines reaching about 50 million won ($43,000) to deter misuse.

In India, the federal government issued an advisory to social media and internet platforms in December to guard against deepfakes that contravene India’s IT rules. Deepfakes themselves are not illegal but, depending on the content, can violate some of India’s information technology rules.

Hesitancy about stricter regulation often stems from concerns that it could hold back technological progress.

“It just assumes that we couldn’t make these regulatory or design changes in order to at least significantly reduce this,” Vredenburgh said. Sometimes the prevailing social attitude is that such incidents are the price to pay for having these tools, a view that Vredenburgh said marginalises the perspective of the victims.

“It portrays them as like a small minority of society that might be affected for the good of all of us,” she said. “And that’s a very uncomfortable position, societally, for all of us to be in.”

How is the world reacting?

The White House said it was “alarmed” by the images, while Swift’s fanbase, known as Swifties, mobilised to take action against them.

“While social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation and nonconsensual, intimate imagery of real people,” White House Press Secretary Karine Jean-Pierre said at a news briefing.

US lawmakers also called for safeguards to be introduced.

Since Wednesday, the singer’s fans have been reporting accounts and have launched a counteroffensive on X, using the #ProtectTaylorSwift hashtag to flood the platform with positive images of Swift.

“We often rely on users and those that are affected or people who are in solidarity with them in order to do the hard work of pressuring companies,” Vredenburgh said. She added that not everyone can mobilise that kind of pressure, so relying on public outrage to bring about lasting change remains a concern.

______________________________________________________________

FOLLOW INDEPENDENT PRESS:

WhatsApp CHANNEL

https://whatsapp.com/channel/0029VaAtNxX8fewmiFmN7N22

TWITTER

https://twitter.com/IpIndependent

FACEBOOK

https://web.facebook.com/ipindependent